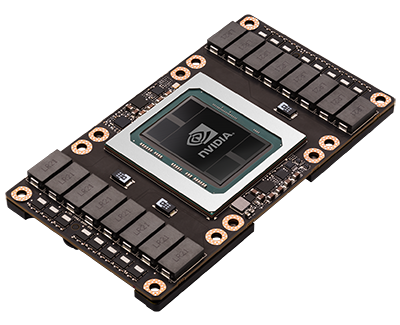

We recently released RealityServer 4.4 build 1527.93. This update includes Iray 2016.2 and some interesting new features. While still an incremental update, it finally delivers the big item many of our customers have been asking for: NVIDIA Pascal architecture support. So your Tesla P100, Quadro P6000, Quadro P5000, GeForce GTX TITAN X, GeForce GTX 1080, 1070 and other Pascal cards will now work with RealityServer. Keep reading for more details of the new features in update 93 of RealityServer.

This release does not yet expose all of the new functionality of the underlying Iray 2016.2 release. There was so much interest in the Pascal support that we wanted to push out a release with basic Iray 2016.2 integration as quickly as possible. The next RealityServer release will contain a great new feature that allows rendering of stereo images (including 360 degree VR images) in a single pass; right now you need to render the left and right eye separately. For now, let’s look at what is in this update.

Cards using the NVIDIA Pascal GPU architecture have been out for a little while now. With the update to Iray 2016.2 in this release of RealityServer, Pascal is finally supported. This includes the Tesla P100, Quadro P6000 and P5000 as well as the consumer GeForce GTX TITAN X (confusingly the Pascal version has the same name as the Maxwell one), GeForce GTX 1080, 1070, 1060 and more. We have been doing a little benchmarking and the results are impressive. New cards are still being released in both the professional and consumer range, so expect to see updates to our benchmarks as we get access to hardware for testing. In the meantime we have included below a preview from some rather unscientific testing we did.

In addition to Pascal support, several aspects of Iray sampling performance have also been improved for some scenes. Note that the various sampling algorithm changes mean you cannot directly compare benchmark numbers from this release of RealityServer with those from previous versions, since one iteration now takes longer but produces a better result in that time. See this article on the NVIDIA Iray developer blog for more details. In any event, here are our initial results from testing consumer cards.

Megapaths/sec

We definitely have to do a lot more rigorous testing, but it’s looking like cards in the Pascal generation are performing about 1.4x – 1.5x faster than the equivalent card at the same tier from the Maxwell generation. The pricing and power draw of Pascal are also very attractive, really driving some great price / performance characteristics.

One additional note: this release now uses CUDA 8.0 and as such requires the very latest drivers for your GPU. Make sure you update your drivers before you try to run this update of RealityServer.

A previous update quietly rolled out MDL aperture functions. While these are very flexible, they need some knowledge to set up and use. This release adds some really simple settings to help control the look of the bokeh in the out of focus regions of your image while using depth of field. Three new attributes have been added to the camera: mip_aperture_num_blades, mip_aperture_blade_rotation_angle and mip_lens_radial_bias. When the first attribute is greater than 2, the blade shaped aperture is activated and it can then be rotated with the mip_aperture_blade_rotation_angle attribute.

To illustrate, we knocked up a quick scene with a bit of scripting to create a random set of square area light sources and set up depth of field to throw them out of focus. Below you can see the effect of varying the number of blades.

This can be great when trying to simulate a specific type of lens easily without the need to create aperture maps or functions. You can get even more creative with the bokeh in your images by adjusting the mip_lens_radial_bias attribute. This defaults to 0.5. Increasing the value towards 1.0 concentrates the energy towards the center of the aperture, while reducing it towards 0.0 pushes the energy towards the outside, creating rings. Here are some examples.

These settings are just attributes on your camera so you can easily set them with the element_set_attribute or element_set_attributes command. Combined with lens distortion features previously introduced into Iray you can simulate almost any real-world lens and camera combination to get just the result you need.
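As a rough illustration of the paragraph above, the following Python sketch builds a batch of JSON-RPC requests that set the three bokeh attributes with element_set_attribute. Only the command name and the three attribute names come from this post. The parameter names (element_name, attribute_name, attribute_value, attribute_type, create), the attribute types (Sint32, Float32) and the camera name example_camera are assumptions, so check the command documentation shipped with your RealityServer installation before relying on them.

```python
import json


def set_attribute_command(element_name, attribute_name, value, type_name, command_id):
    """Build one JSON-RPC 2.0 request for the element_set_attribute command.

    The parameter names used here are assumptions, not confirmed by this post.
    """
    return {
        "jsonrpc": "2.0",
        "method": "element_set_attribute",
        "params": {
            "element_name": element_name,
            "attribute_name": attribute_name,
            "attribute_value": value,
            "attribute_type": type_name,  # assumed type name
            "create": True,               # assumed flag: create the attribute if missing
        },
        "id": command_id,
    }


def bokeh_commands(camera_name, num_blades, rotation_angle, radial_bias):
    """Return a batch of requests that sets the three bokeh attributes on a camera."""
    settings = [
        ("mip_aperture_num_blades", num_blades, "Sint32"),
        # The units of the rotation angle are not stated in this post.
        ("mip_aperture_blade_rotation_angle", rotation_angle, "Float32"),
        ("mip_lens_radial_bias", radial_bias, "Float32"),
    ]
    return [
        set_attribute_command(camera_name, name, value, type_name, index + 1)
        for index, (name, value, type_name) in enumerate(settings)
    ]


# Hypothetical camera name; six blades, no rotation, default radial bias.
batch = bokeh_commands("example_camera", 6, 0.0, 0.5)
print(json.dumps(batch, indent=2))
```

The printed JSON array could then be sent to the server as a single batch request by whatever HTTP client you already use, which is deliberately left out of the sketch.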

There used to be a limit of 16 on the number of section planes you could create. This has now been removed and you can create as many section planes as you like. Additionally, NVIDIA snuck in a handy new feature a few users had been asking us about: the near clipping plane of the camera can now be used, and reflections and indirect lighting do not reveal the clipped geometry. This can be really useful for cases where you need to position a camera outside a scene but look inside.

To enable this feature you need to set the mip_use_camera_near_plane attribute on your camera, after which the near (hither) clip plane on your camera will be honoured. You can set the near clip plane with the camera_set_clip_min command. Here’s a quick example.

Interior scene using near plane clipping

In this image the camera is actually positioned outside the room and the wall is clipped away using the near clipping plane of the camera. While this can also be achieved with section planes, to do so you would need to compute the correct orientation and position of the plane from the camera. With this feature the clipping plane moves with your camera. There are also issues with using section planes for this due to the way they handle indirect light paths.
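The two steps described above (enable the attribute, then set the near clip distance) might be expressed as a pair of JSON-RPC requests like the ones built in this Python sketch. The attribute name mip_use_camera_near_plane comes from this post. Everything else is an assumption: the setter is assumed to be called camera_set_clip_min with parameters camera_name and clip_min, the attribute is assumed to be of type Boolean, and example_camera and the distance 2.5 are placeholders.

```python
import json


def near_plane_commands(camera_name, near_distance):
    """Build requests that enable near plane clipping and set the near distance.

    Command parameter names and the Boolean type name are assumptions.
    """
    enable = {
        "jsonrpc": "2.0",
        "method": "element_set_attribute",
        "params": {
            "element_name": camera_name,
            "attribute_name": "mip_use_camera_near_plane",
            "attribute_value": True,
            "attribute_type": "Boolean",  # assumed type name
            "create": True,               # assumed flag: create the attribute if missing
        },
        "id": 1,
    }
    set_near = {
        "jsonrpc": "2.0",
        "method": "camera_set_clip_min",  # assumed name of the near clip setter
        "params": {
            "camera_name": camera_name,   # assumed parameter name
            "clip_min": near_distance,    # assumed parameter name, in scene units
        },
        "id": 2,
    }
    return [enable, set_near]


# Hypothetical camera name and near distance.
commands = near_plane_commands("example_camera", 2.5)
print(json.dumps(commands, indent=2))
```

Because the clip distance is a property of the camera, moving the camera afterwards needs no further commands, which is the practical advantage over recomputing a section plane.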

As with most jumps in Iray versions, we automatically inherit a lot of fixes and optimisations made by NVIDIA. These include improvements to memory usage in certain situations and various fixes for scenes that previously performed poorly. You can see more detail in the neurayrelnotes.pdf file included with the release.

One other item of note is that the deprecated MetaSL support has now been fully removed from RealityServer, so if you were relying on this older shader language technology you will have to migrate to MDL before using this release.

There is also a new option for Iray Interactive, irt_fast_convergence_start, which optimises Iray Interactive for convergence rather than interactivity. This will be useful for those using Iray Interactive as a final frame renderer.

We’d love to hear about your experiences with the new Pascal-based hardware and how you find the performance. From what we have seen, it looks very promising. Please get in touch if you have questions or want to share some of your findings.

Paul Arden has worked in the Computer Graphics industry for over 20 years, co-founding the architectural visualisation practice Luminova out of university before moving to mental images and NVIDIA to manage the Cloud-based rendering solution, RealityServer, now managed by migenius where Paul serves as CEO.